December 2022 Link spam update at Google. What does it mean for link building?

Google changes its systems countless times a year. While it keeps quiet about the vast majority of updates, it regularly informs us about the more significant ones and their focus. And so, on 14 December 2022, we learned that an update to combat link spam was rolling out. The deployment was completed on 12 January 2023. Should you take any action?

TL;DR

- Don’t delete links, it’s a waste of money

- See who has gained in the SERPs and what they’re doing

- Review your linking practices

Before I go any further, a few important facts and a note.

I work mainly in the Czech and Slovak markets. Link building here is quite different from link building in English-speaking countries.

The roll-out took a long time because it was paused over the holidays, something Google reportedly does often, as Google’s Danny Sullivan confirmed on Mastodon.

The December 2022 link spam update also coincided with the helpful content update, which began on 5 December 2022 and ended on 12 January 2023.

- 5 December 2022: the helpful content update starts to roll out

- 14 December 2022: the roll-out of the link spam update begins

- 12 January 2023: the helpful content update and the link spam update finish

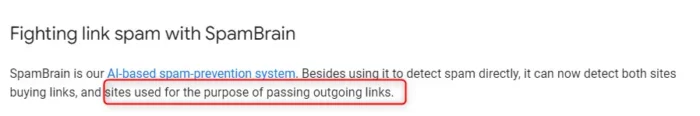

Assessing the impact is therefore difficult, but I still think it is partly possible. Besides, this is not the first time that updates have overlapped. Google is also traditionally stingy with detailed commentary. It’s worth noting that in its comment on the update, it specifically mentioned sites that link out as part of link schemes. Unfortunately, we don’t know exactly what that means.

SpamBrain now hunts links as well

Link spam detection is handled by a system called SpamBrain. It’s based on artificial intelligence, and Google has been using it to detect spammy sites since 2018. What’s new is that it’s now being used to detect link manipulation.

Such a system works continuously and evaluates links all the time. If it finds links that it considers unnatural, it will invalidate them. In layman’s terms, they will no longer affect the rankings of the sites they may have helped up to that point. There is no way to “fix” such links to make them work for you again.

And that’s where insights come in. Thanks to tools, we can see ranking changes on other people’s sites and start thinking about what has changed and where the new boundaries are, so that Google doesn’t see that you’re artificially linking, if that’s the case for you. Let me show you how to do this.

Is your site affected?

It may take some time before you notice a drop in traffic in your analytics. Rank tracking gets around this by letting you see changes in Google visibility very quickly, and that’s where you should start. A few lines below you’ll learn how to do this in various tools.

If you’ve never done any active link building before, you can probably sleep well at night. But everyone else should at least do a basic quick tour of the data. So how do you do this audit?

Data in Ahrefs

Ahrefs regularly pulls Google results for the millions of queries in its database, so it provides great data here. The main thing to look for is a decline in the number of keywords in each position range; traffic estimates are secondary. But watch out for seasonal changes.

Google Search Console (GSC)

For your own sites, you can also look at data directly from Google. If your average position has deteriorated since 14 December 2022, you probably have something to worry about. But I deliberately list GSC last. On a really large site, changes in average position may not show up clearly. Also, the average position gets worse as more new content is added to the index.
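The before/after comparison can be sketched in a few lines of Python. This is only an illustration: the rows are invented sample values, and the `(date, average position)` shape is an assumption about how you load your own Search Console export.

```python
from datetime import date
from statistics import mean

# Hypothetical daily rows as (day, average position). In practice these
# would be loaded from your own Search Console performance export.
rows = [
    (date(2022, 12, 10), 8.1),
    (date(2022, 12, 12), 7.9),
    (date(2022, 12, 16), 9.4),
    (date(2022, 12, 20), 10.2),
]

UPDATE_START = date(2022, 12, 14)  # start of the link spam update roll-out


def position_shift(rows, cutoff):
    """Return (mean before, mean after, difference) around the cutoff date."""
    before = [pos for day, pos in rows if day < cutoff]
    after = [pos for day, pos in rows if day >= cutoff]
    if not before or not after:
        raise ValueError("need data on both sides of the cutoff")
    avg_before, avg_after = mean(before), mean(after)
    return avg_before, avg_after, avg_after - avg_before


avg_before, avg_after, shift = position_shift(rows, UPDATE_START)
# A positive shift means the position number grew, i.e. rankings got worse.
print(f"before: {avg_before:.2f}, after: {avg_after:.2f}, shift: {shift:+.2f}")
```

Keep the caveats above in mind: on a large site, or one that keeps adding new content, a shift in this single number proves little on its own.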

So, have you noticed a drop? What should you do next?

Affected queries and URLs

To find out exactly what’s wrong, you need to find the content that lost the visibility. Anyone using any rank tracking tool will be at an advantage here.

In Ahrefs, the “patients” can be found in the “Organic Keywords” report. Select these two dates for comparison:

- 15.1.2023

- 14.12.2022

And set the filter to “Position: Declined”.

Again, you’re more likely to be looking for those larger changes.

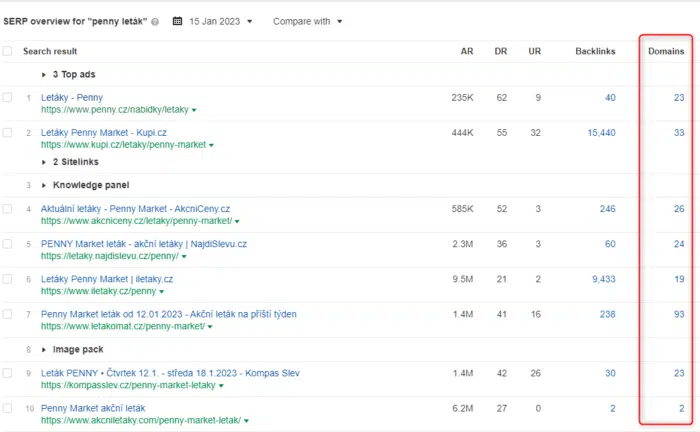

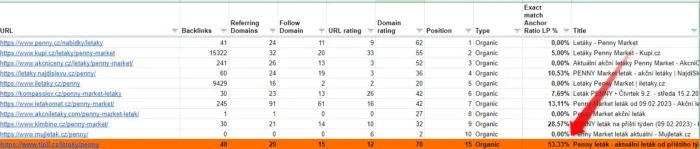
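If you use a different rank tracker, the same comparison can be done on two exported snapshots. The sketch below is a minimal example under stated assumptions: the queries and positions are hypothetical, and the threshold `min_drop` is an arbitrary choice for focusing on larger changes, not a value from any tool.

```python
# Hypothetical position snapshots keyed by search query (invented values).
positions_before = {"query a": 5, "query b": 3, "query c": 9, "query d": 4}
positions_after = {"query a": 13, "query b": 2, "query d": 8}


def declined(old, new, min_drop=3):
    """List (query, old position, new position) for drops of at least min_drop."""
    drops = []
    for query, old_pos in old.items():
        new_pos = new.get(query)
        if new_pos is None:
            continue  # query no longer ranks at all; review lost keywords separately
        if new_pos - old_pos >= min_drop:
            drops.append((query, old_pos, new_pos))
    # Largest drops first, since those are the ones worth investigating
    return sorted(drops, key=lambda d: d[2] - d[1], reverse=True)


for query, old_pos, new_pos in declined(positions_before, positions_after):
    print(f"{query}: {old_pos} -> {new_pos}")
```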

OK, we know the suspect queries and their landing pages. How do we proceed? I’m going to show you a specific case where you can’t do without an Ahrefs account. I have no relation to the example site.

- Search query: Penny leták (“penny flyer”)

- Change of position from 5 to 13

- Landing page tipli.cz/letaky/penny

In Keywords Explorer, we look at a summary of Google results for this query. In the filter I disable the current top 10 and add my own URL tipli.cz/letaky/penny. The graph shows me that there has indeed been a drop around the time of the update.

Next, I’m interested in how many links each URL currently in the top 10 on Google has. The numbers are in the low teens, Tipli has 26. That doesn’t seem suspicious to me, but these are all links, regardless of a possible rel=nofollow on some of them (hey Ahrefs, here’s a hint for a UI update 🙂).

A further analysis could look at how often the search phrase appears in the anchor text of the links:

- exact match

- partial match

This can be measured as a share of the links to the URL itself or across the whole site. Note that it takes a fair amount of manual work to get proper data:

- Export all dofollow anchor texts

- Mark exact matches (I used OpenRefine and treated an anchor containing both words from the query, in any order, as an exact match)

- Summarise the results in a table (you can see the patterns in the link below)
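The marking step can also be scripted instead of done in OpenRefine. The following Python sketch is one possible reading of the rule above, not the exact procedure used here: an anchor counts as "exact" when it contains every word of the query in any order, and as "partial" when it shares at least one word. The anchors and the domain are hypothetical.

```python
import re


def tokens(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def classify_anchor(anchor, query):
    """Label an anchor as 'exact', 'partial' or 'other' relative to the query."""
    query_words = tokens(query)
    anchor_words = tokens(anchor)
    if query_words and query_words <= anchor_words:
        return "exact"  # every query word is present, in any order
    if query_words & anchor_words:
        return "partial"  # at least one query word is present
    return "other"


def match_ratios(anchors, query):
    """Return the percentage of anchors in each class."""
    counts = {"exact": 0, "partial": 0, "other": 0}
    for anchor in anchors:
        counts[classify_anchor(anchor, query)] += 1
    total = len(anchors)
    return {kind: (100 * n / total if total else 0.0) for kind, n in counts.items()}


# Hypothetical dofollow anchors for one landing page (invented for illustration)
anchors = ["Penny leták", "leták Penny aktuální", "Penny", "example.cz", "zde"]
print(match_ratios(anchors, "penny leták"))
# {'exact': 40.0, 'partial': 20.0, 'other': 40.0}
```

Running the same function over the anchors of each top 10 URL gives one comparable percentage per competitor.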

And the result?

If I look at the share of exact-match anchors among the links to URLs that rank well for the query “Penny leták” and compare them with Tipli, I see that:

- Tipli exact match: 53.33%

- The highest ratio in the current TOP10 is 28.57%

- 7 out of 10 are an order of magnitude lower

So if this is one of the signals, and I think it is easy to calculate, then Tipli clearly stands out as the black sheep of the group. Such links do not occur naturally. This is also true for some of the others in the dataset, but there is probably a line somewhere where SpamBrain has to stop, at least for now.

There can be many other criteria for evaluating a link profile, so diagnosing drops is actually quite difficult. This particular check, however, is fairly easy to do.

How to adjust your link building practices?

I have been trying to deal with link patterns since 2013, when I started looking into penalties for unnatural links.

If your ‘opponent’ is a system that by its very nature learns from big data, it will be challenging. Models trained on big data will pick up many of the patterns you create through your link building activity.

So take an honest look at how you get links:

- Do you link using certain content formats repeatedly (e.g. articles only)?

- Do you link with a preponderance of certain anchor text?

- Do you repeatedly link to the same URL?

- Do you repeat the same number of links in your articles?

- Are your links always in the same place in your articles?

- Does your link building come in bursts rather than at a steady pace?

- Is your content labelled as commercial?

- Are your links on sites where you yourself can see that the majority of the content is there to be linked to?

- Are certain tactics prevalent (e.g. editing existing content by inserting a link)?

If links grow naturally, their common feature is a certain degree of chaos. Patterns arise here too, but they are not stacked on top of each other to the same extent. While many sites may link to a page at once, they will use a very diverse range of text to do so.
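One crude way to put a number on this "chaos" is to measure how concentrated the anchor texts are. The sketch below is an assumption of mine, not a known SpamBrain signal: it reports the share of the single most common anchor and the ratio of distinct anchors to all links. Both sample profiles are invented.

```python
from collections import Counter


def anchor_concentration(anchors):
    """Return (share of the most common anchor, distinct anchors / all anchors)."""
    normalized = [a.strip().lower() for a in anchors]
    total = len(normalized)
    if total == 0:
        return 0.0, 0.0
    counts = Counter(normalized)
    top_share = counts.most_common(1)[0][1] / total
    distinct_ratio = len(counts) / total
    return top_share, distinct_ratio


# Hypothetical profiles: one built with repeated keyword anchors, one varied
built = ["penny leták"] * 6 + ["leták penny", "penny"]
varied = ["penny", "here", "example.cz", "this flyer", "leták",
          "source", "www.example.cz", "current offer"]

print(anchor_concentration(built))   # (0.75, 0.375)
print(anchor_concentration(varied))  # (0.125, 1.0)
```

A high top share combined with a low distinct ratio is the kind of pattern the checklist above asks you to look for in your own profile.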

Conclusion

Let’s get this straight. Reverse engineering is very difficult here. There is a lot of data, in Ahrefs you only get the data you need after a lot of manual cleaning, and we can only guess at the factors SpamBrain can use, not to mention the ways it can combine them. I can only make general recommendations, based on the rule “better safe than sorry”:

- have as many link tactics as possible

- limit or forget about “keywords” in link text altogether, or set a maximum number of keywords for each landing page

- don’t link in content that is labeled “commercial message”, “PR article”, etc.

- limit tactics that are easily recognizable (e.g. editing old articles without expanding on the original content)

- monitor changes in positions after adding links to see what works

- discuss what is different about a competitor who ranks for “your” query with zero links

Sources you definitely have to read.

- https://developers.google.com/search/updates/helpful-content-update

- https://developers.google.com/search/docs/essentials/spam-policies#link-spam
